Growing up, Pratik Chaudhari, Assistant Professor in Electrical and Systems Engineering (ESE) and member of the General Robotics, Automation, Sensing and Perception (GRASP) Laboratory, just wanted to build robots.

He started in college with wheeled robots and moved on to hopping robots. By the time he was a master’s student, he had graduated to self-driving cars. That was when he realized that teaching robots to navigate roads on their own was the easy part. The hard part was teaching them to respond to the other drivers around them. “Perception is the bottleneck to autonomy,” Chaudhari says. The cars had decent sensors, but they didn’t always know what to look at, or how to interpret what they saw.

In graduate school, Chaudhari pursued a Ph.D. in Computer Science with a focus on deep learning. This field of artificial intelligence stacks multiple layers of computation (hence the “deep” in “deep learning”) to emulate the workings of biological brains. “The biological brain is a prediction machine,” Chaudhari says. “It uses past data, identifies patterns within it, and uses those patterns to extrapolate how future data will look.”

At the time, Chaudhari says, researchers were beginning to look at deep learning as a promising avenue for teaching machines to interpret visual data. “Using massive datasets, we might help machines uncover salient patterns that are sufficient for visual perception.”

Today, Chaudhari’s lab at Penn Engineering draws on disciplines as diverse as physics and neuroscience to understand the process of learning itself. The ultimate goal is to discover the principles that underlie learning in both artificial and biological systems, so that engineers can harness those principles in the machines they design. “I would be very happy as a researcher if I could build a machine that can do most things that your pet dog can do,” Chaudhari says.

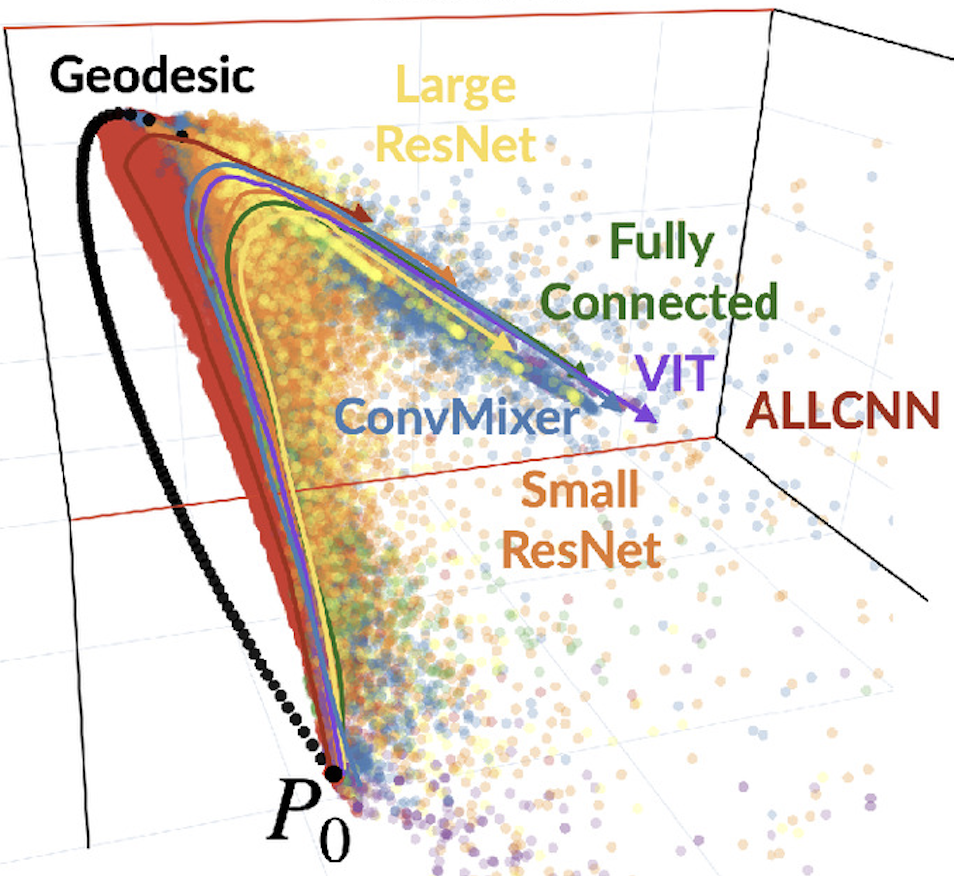

Recently, Chaudhari co-authored a paper on many of the past decade’s neural networks. These are computing systems, inspired by the structure of biological neurons, that perform many simple computations to accomplish larger tasks. The paper shows that when such networks are trained on the same data, they learn to classify images in the same manner, regardless of their size or design. “Problems that we thought of as distinct, like classifying images of different birds, or animals or vehicles, turn out to be spookily similar,” Chaudhari says.

The result hints that it may be possible to design hyper-efficient algorithms that exploit this commonality. Such algorithms could classify images using a fraction of the time and cost needed to train today’s neural networks.

Algorithms Chaudhari has developed in deep learning have already attracted interest across a number of industries and applications. “We have developed very effective techniques to train deep networks and eke out more performance from the data,” says Chaudhari.

Over the past few years, for instance, his group has focused on building systems that can learn many different tasks from very few examples. “Suppose you are a farmer in Pennsylvania who grows strawberries,” he says, “and you want a machine that classifies different kinds of strawberries. You might not appreciate a computer scientist telling you that they need 1,000 labeled samples to get started.”

To tackle these problems, Chaudhari has assembled a research group of undergraduate and graduate students drawn from a range of departments and disciplines. In his view, a diverse group of researchers produces better results. “Undergraduates are exceptional at out-of-the-box thinking,” he says, “perhaps because they don’t know where the box is!”

In addition to conducting research and mentoring graduate and undergraduate students, Chaudhari teaches ESE 5460: Principles of Deep Learning. The course will be offered as an elective in the newly announced Raj and Neera Singh Program in Artificial Intelligence.

The course brings students to the frontier of deep learning, at times introducing them to material so new that, as Chaudhari puts it, “I could give you a homework problem after four weeks in this class, and it would be at the edge of what we know in the field.”

As a teacher, Chaudhari’s goal is to help students understand not just today’s AI technologies, but also the foundational ideas that will underpin tomorrow’s systems. “Convolutional neural networks were very popular a few years ago,” he says, “and now transformers are popular — five years from now, it will be something else, but the principles that power these systems are the same. And if you know the principles, you will not only be able to understand new techniques, you might also be able to discover better ones.”

To learn more about Pratik Chaudhari and his research, please visit his website. In the fall term, Chaudhari teaches ESE 5460: Principles of Deep Learning and in the spring term, he teaches ESE 6500: Learning in Robotics.